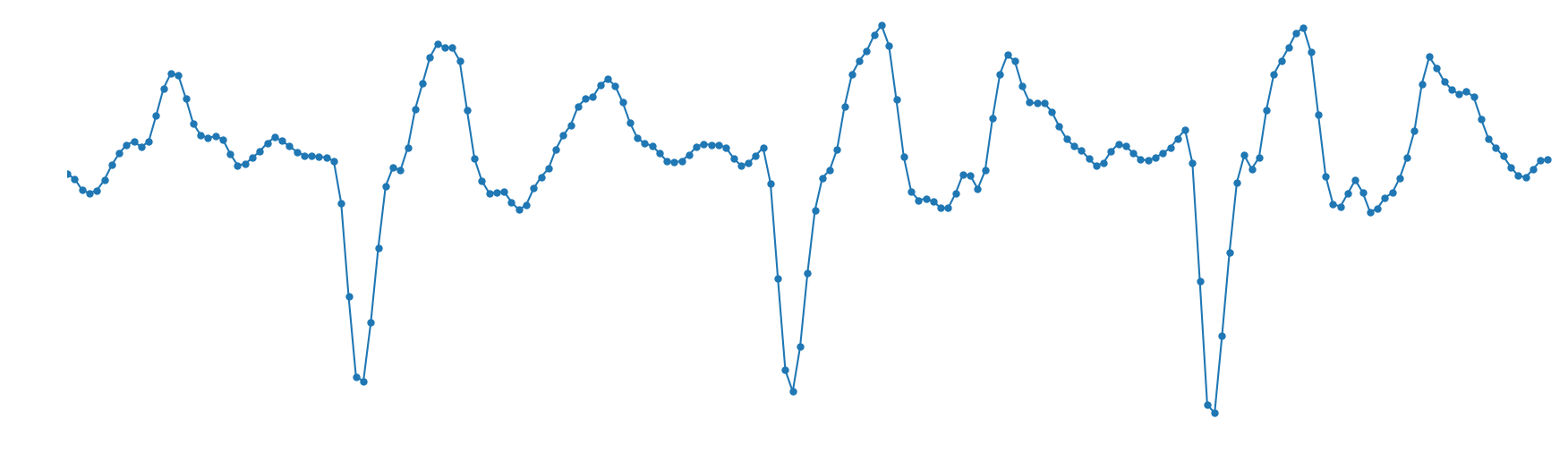

LPCNet is a new project out of Mozilla’s Emerging Technologies group: an efficient neural speech synthesiser that is less computationally complex than some of its predecessors. Neural speech synthesis models like WaveNet have already demonstrated impressive quality, but their computational cost has made them hard to use in real time, especially on phones. As with the RNNoise project, our solution with LPCNet is to combine deep learning with digital signal processing (DSP) techniques.
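To give a feel for the DSP half of that combination, here is a minimal pure-Python sketch of linear prediction, the classic technique the "LPC" in the name refers to. Each sample is predicted as a weighted sum of the previous few samples, and only the leftover residual remains to be modelled, which is the part a neural network would take on in a design like LPCNet. This is an illustration under stated assumptions, not LPCNet's actual code: the function names, the order-2 predictor, and the toy sinusoid are all hypothetical choices made for the example.

```python
import math

def autocorrelation(signal, max_lag):
    """Autocorrelation r[0..max_lag] of a finite signal."""
    n = len(signal)
    return [sum(signal[i] * signal[i - lag] for i in range(lag, n))
            for lag in range(max_lag + 1)]

def lpc_coefficients(signal, order):
    """Levinson-Durbin recursion (illustrative helper, not LPCNet's API).

    Returns a[0..order-1] such that x[n] is approximated by
    sum(a[k] * x[n - 1 - k] for k in range(order)).
    """
    r = autocorrelation(signal, order)
    a = [0.0] * (order + 1)   # a[0] is unused, a[1..order] are the taps
    err = r[0]                # prediction error energy so far
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err         # reflection coefficient for this order
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:]

def prediction_residual(signal, coeffs):
    """What is left of each sample after subtracting its linear prediction."""
    order = len(coeffs)
    residual = []
    for n in range(order, len(signal)):
        predicted = sum(coeffs[k] * signal[n - 1 - k] for k in range(order))
        residual.append(signal[n] - predicted)
    return residual

# Toy input: a sinusoid, which two past samples predict almost perfectly.
signal = [math.sin(0.3 * n) for n in range(200)]
coeffs = lpc_coefficients(signal, order=2)
residual = prediction_residual(signal, coeffs)

signal_energy = sum(x * x for x in signal)
residual_energy = sum(e * e for e in residual)
print("coefficients:", coeffs)
print("residual / signal energy:", residual_energy / signal_energy)
```

The residual carries far less energy than the signal itself, which hints at why handing the predictable part to cheap DSP can leave a smaller job for the network. Real speech is much less predictable than a sinusoid, so the gain in practice is smaller than in this toy case.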

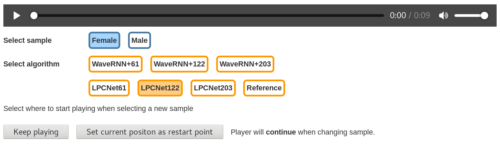

LPCNet can help improve the quality of text-to-speech (TTS), low-bitrate speech coding, time stretching, and more. You can hear the difference for yourself on our LPCNet demo page, where LPCNet and WaveNet speech are generated with the same complexity. The demo also explains the motivations for LPCNet, shows what it can achieve, and explores its possible applications.

You can find an in-depth explanation of the algorithm used in LPCNet in this paper.

About Jean-Marc Valin

Jean-Marc Valin has a B.S., M.S., and Ph.D. in Electrical Engineering from the University of Sherbrooke. He is the primary author of the Speex codec and one of the main authors of the Opus codec. His expertise includes speech and audio coding, speech recognition, echo cancellation, and other audio-related topics. He is currently employed by Mozilla to work on next-generation multimedia codecs.